Let's talk about bot traffic in Google Analytics. Most of the time, bot traffic in our analytics data gets a bad name. We think of it as spam, but there are good bots too. Whether or not you welcome them, 99% of the time you don't want to see bot traffic in your analytics reports.

Key takeaways

- Bots have good and bad purposes.

- In both cases, you want to keep bot traffic out of Google Analytics.

- Google Analytics 4 blocks known bot traffic automatically.

- You need to monitor anomalies in your reports to identify bot traffic.

- To prevent skewed data, you can take measures outside and inside your GA account.

- To keep your reports free of bot traffic, build the data quality review cycle into your workflow.

What is bot traffic, anyway?

Bot traffic includes all visits to your site that do not come from humans. Bots are programs developed with good or bad intentions. In all cases, you don’t want to misinterpret this traffic as visits from your target audience.

Not all bots come in peace, but for sure, there are a lot of them out in the wild. According to this study, bots generated around 47% of the global web traffic in 2022.

Take a step back and let that sink in.

In 2022, only about 53% of web traffic came from people like you and me.

To get a good understanding of what you are up against in Google Analytics, let me briefly list the nature of the beast that can visit your site and skew your reports.

3 Examples of bad bots

Bad bots come in many forms. They can seriously harm your site in unexpected ways.

1. Scalper bots

These nifty bots buy tickets and other exclusive items online with the sole purpose of reselling them at a higher price later on. Selling out high-ticket items in seconds may sound like a dream for a platform or web shop. In reality, this can burn your reputation to the ground. People don’t like to be beaten by robots.

2. Spam bots

These bots excel at trolling. They can, for instance, fill in comment sections on blogs at a higher speed than you can remove them.

3. Scraper bots

These programs steal web content at high scale. The intention is often to copy competitors and do them harm further down the road.

3 Examples of good bots

Good bots can help businesses thrive. They do tedious jobs in the blink of an eye and they can also collect tons of data that would otherwise take days or even months to collect.

1. Web crawler, spider, or search engine bot

Googlebots are the most advanced ones. They constantly scan the internet in search of videos, images, texts, links and whatnot. Without these bots, your site would not have a single visitor from organic search.

2. Backlink checkers

These programs make it possible to detect all the links a website or page is getting from other websites. They are an important tool for SEOs.

3. Website monitoring bots

These bots keep an eye on websites and can notify the owner when the website is, for instance, under attack by hackers or when it’s completely offline.

Bots. The good and the bad.

And then there is you, dealing with ugly bot traffic in Google Analytics. You don’t want to make decisions based on their visits to your site.

Why? Because bots are not real users, and they don't perform like humans. Heavy bot traffic (5% of sessions or more) can skew our data, pollute our analytics and ruin typically useful metrics like page value.

The question is: do you really need to worry?

Does Google Analytics 4 block bot traffic?

Yes, GA4 blocks known bot traffic. It uses two resources to identify good and bad bots. The first one is research by Google. The second is the monthly updated IAB/ABC International Spiders and Bots List.

I love Google Analytics… But sometimes their tool misses the mark.

Now, I've been pretty outspoken about how Google handled spam traffic. And for a long time, my take was that Google wasn't doing much to keep the spam traffic out of our analytics reports.

Google was like Fredo in The Godfather Part II when it came to defending our data against spam: drastically underachieving!

And the community noticed. Like many Google Analytics users, I started checking out other analytics products. Maybe the grass was greener somewhere else?

When users started threatening to move away from Google Analytics, Google took notice. Since Google Analytics 4, things have gotten better. There's been a noticeable reduction of spam traffic in our analytics reports.

But, spam is just one type of bot traffic that can pollute our analytics data. Many varieties of bots scan, crawl and even attack our websites. And sometimes we are the ones sending bots to our sites.

Example of bot traffic in GA4

One of our Google Analytics Mastery Course students noticed a big issue with bot traffic in his reports. And he wants to know how to respond to the problem.

Keith Asks:

We recently sent an email blast out using an email list that we rented. The company is well-known with a good reputation as far as we can tell. We received clicks to the site but no action once on the website and looking through GA, I see that 82% of the clicks can be located to one city in the United States. We are not even targeting that region in the US.

Here's Keith’s problem: His company sent out a targeted email campaign, and it resulted in a bunch of unwanted traffic in their Google Analytics reports. This happens from time to time.

But then something interesting happened. The geo-location for the majority of this traffic was coming from one city they don’t even target.

Keith wants to know if this is bot traffic.

It is most likely bot traffic

Here's why: Keith is getting a disproportionate amount of traffic that doesn’t even sound like it exhibits human behavior.

It's doubtful that a bunch of people in the same city sit around and click email links, and then immediately bounce off a site once they click through.

This situation clearly shows that you cannot rely blindly on GA4 to keep your reports free from non-human traffic.

You need to defend your data against bot traffic and that is a bit like playing whack-a-mole.

How to identify bot traffic within Google Analytics 4?

To identify bot traffic in GA4, you need to keep an eye out for anomalies. Look specifically for suspicious data that differs completely from the rest of your report.

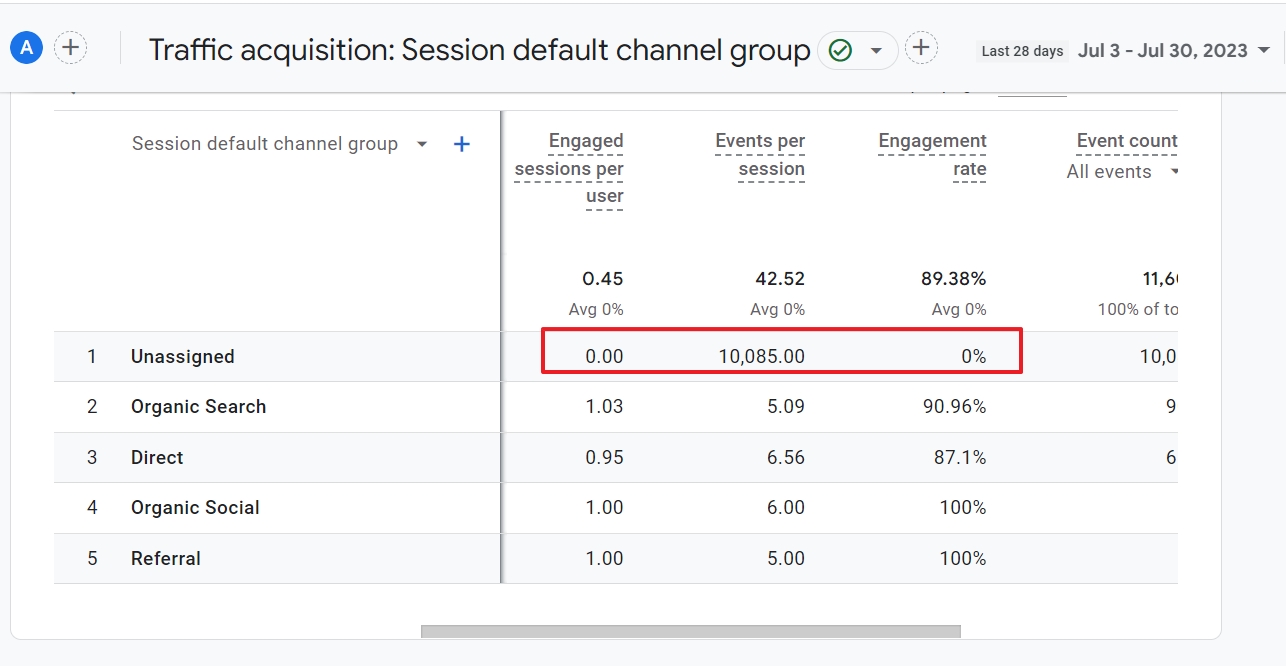

Let me illustrate this with a table that has bot traffic printed all over it: 10K events from unassigned traffic in one session and 0% engagement rate.

Let's look at how you can identify bot traffic in your Google Analytics reports. The key is to focus on metrics that help you distinguish human from robot behavior.

Unassigned or direct traffic source

Google attempts to assign a channel to every visit. You can find this in the traffic acquisition report. Bots typically access your site directly or in obscure ways. They are usually categorized as “Direct” or “Unassigned” traffic.

In the screenshot below, the low engaged sessions metrics clearly stand out from the crowd.

Peak of conversions

Conversions are the most exciting things that can happen in a GA4 account. If you have set them up wisely and correctly, they tell you if you are on the right track to achieve your business goals.

Some bots can give you false hope. They fill in forms and make you believe your site is generating leads. Some bots leave some time in between their evil deeds. Others spam you without mercy.

The thing is that you need to pay attention to any suspicious-looking data when analyzing your conversion and monetization reports.

Suspicious sources and referrals

Some developers don’t make any effort to hide where the traffic of their bot is coming from. This traffic is likely to end up quickly on the list of automatically blocked bots.

But some slip through the cracks and new ones appear faster than mushrooms on that slice of bread you completely forgot about.

To detect suspicious sources that lead traffic to your site, open Reports > Acquisition > Traffic Acquisition. Then change the primary dimension to Source/medium.

Look for anomalies, such as a high volume of Users and a low Average engagement time per session.

High volume of bounces

Not all bounced visits are bad, but pages with a high bounce rate can be a sign of bot traffic.

I am not talking about thank you pages, but all your pages that usually invite people to explore more of your website. One way to detect where this happens is to create an exploration in GA4 that looks similar to the entrances report but uses the bounce metric instead.

Low engagement sessions

This GA4 built-in metric is a powerful way to detect sessions with a low engagement rate, which is typical of bots. You can use this particular session metric to create an audience segment.

Then you can use that segment to filter data in exploration reports.

The downside is that the segment is not limited to bots: it also catches humans who had a low-engagement session.

Low engagement rate

All engagement metrics are useful in your battle against bot traffic. In general, humans behave differently than software programs.

You can find these metrics in many standard GA4 reports, or use them in your own custom GA4 reports and explorations.

Zero engagement time

The faster a bot can do its job on a website, the happier the owner of the bot.

Hit, collect, spam or even do a good thing and move on.

GA4 records engagement time in milliseconds. If you see sessions in your reports that lasted 0 or only a few milliseconds, you may be looking at bot traffic.

Suspicious traffic from cities or regions

Don’t open a bottle of champagne when you see a sudden peak of visitors to your site from cities, regions or even countries you are not even targeting.

You can quickly find geographic data from your users in your GA4 account under Reports > User Attributes > Overview.
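
If you export the city of each session, a few lines of Python can show how concentrated your traffic is. This is only a minimal sketch: the function names, the 50% threshold, and the city names in the usage note are all assumptions for illustration, not something GA4 provides.

```python
from collections import Counter


def city_shares(session_cities):
    """Map each city to its share of all sessions (0.0 to 1.0)."""
    counts = Counter(session_cities)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {city: n / total for city, n in counts.items()}


def dominant_untargeted_cities(session_cities, targeted, threshold=0.5):
    """Cities outside the targeted set that hold more than `threshold` of sessions.

    The default threshold of 0.5 is an arbitrary assumption; tune it to your traffic.
    """
    shares = city_shares(session_cities)
    return sorted(
        city for city, share in shares.items()
        if city not in targeted and share > threshold
    )
```

For example, with 82 sessions from a hypothetical city "Ashburn", 10 from "Chicago" and 8 from "Austin", and only the last two in your targeted set, `dominant_untargeted_cities` returns `["Ashburn"]`. A result like that doesn't prove you have bot traffic, but it tells you where to look first.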

To sum it up: there is not one single metric that can tell you if you have bot traffic in GA or not. Your best chance to spot it is to look at a combination of the metrics, reports and dimensions I listed above.

Then compare the data with other rows and columns of your reports. If it looks suspicious, it probably is a bot.
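
To make the idea of combining signals concrete, here is a rough Python sketch that works on rows of an exported report. Everything in it is an assumption on my part: the `ReportRow` fields, the thresholds, and the rule that two or more warning signs make a row suspicious. Treat it as a starting point, not as a bot detector.

```python
from dataclasses import dataclass


@dataclass
class ReportRow:
    # One row of a hypothetical report export; the field names are assumptions.
    channel: str
    sessions: int
    engaged_sessions: int
    avg_engagement_seconds: float
    events: int


def bot_signals(row: ReportRow) -> list[str]:
    """Return the warning signs a row triggers (thresholds are illustrative)."""
    signals = []
    engagement_rate = row.engaged_sessions / row.sessions if row.sessions else 0.0
    if engagement_rate < 0.05:
        signals.append("very low engagement rate")
    if row.avg_engagement_seconds < 1:
        signals.append("near-zero engagement time")
    if row.sessions and row.events / row.sessions > 500:
        signals.append("unusually many events per session")
    if row.channel in ("Direct", "Unassigned"):
        signals.append("direct or unassigned channel")
    return signals


def suspicious_rows(rows, min_signals=2):
    """Keep rows that trigger at least `min_signals` warning signs."""
    flagged = []
    for row in rows:
        signals = bot_signals(row)
        if len(signals) >= min_signals:
            flagged.append((row.channel, signals))
    return flagged
```

A row like the one from the table earlier (one unassigned session, 10K events, 0% engagement rate) trips all four checks, while a healthy organic search row trips none. Requiring more than one signal keeps ordinary direct traffic from humans out of the flagged list.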

So, how do we keep traffic from bots out of our GA4 reports?

Nobody can guarantee that your GA4 reports will be 100% bot traffic free. To beat the bots, you need to be proactive instead of trusting Google blindly.

Why? Because you can't remove bot traffic from your analytics data after the fact. So you want to put precautions in place before this traffic becomes pervasive in your reports.

1. Install website protection software

Think of a CDN, a website firewall, or security plugins. Good security software can block traffic from bots that would otherwise not be recognised by GA.

2. Block bots in your robots.txt

Talk with your developers about this. The robots.txt file is an easy way to keep bots away from your site and hence Google Analytics. I would, however, not heavily rely on this option because bad bots don’t care if you allow them to visit your website.

And yet I mention this option, because bots use resources on your server. If you keep useless bots away, that can have a positive impact on your site. Don’t take my word for it. Instead, analyze your site speed in GA4.
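
Before you hand a robots.txt change to your developers, you can check what the rules would mean for a well-behaved bot. The sketch below uses Python's standard `urllib.robotparser` module. The bot name `ExampleScraperBot` and the file contents are made up for illustration, and remember that this only tells you what a rule-abiding bot would do.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one unwanted crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleScraperBot
Disallow: /

User-agent: *
Disallow:
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check whether a rule-abiding bot with this user agent may fetch the URL."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

With these rules, `is_allowed(ROBOTS_TXT, "ExampleScraperBot", "https://example.com/blog/")` returns `False`, while the same call for `"Googlebot"` returns `True`.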

3. Filter bot traffic with IP addresses

There are many ways to detect the IP addresses of bot traffic, but you need to look outside GA. You can, for instance, ask your developers to look in server logs. Or use the IP addresses registered by anti-spam plugins.
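
As an illustration of what "looking in server logs" can mean, here is a small Python sketch that counts requests per IP address. It assumes your log lines start with an IPv4 address, as they do in the Common Log Format. The function names and the cut-off of 100 requests are my own assumptions.

```python
import re
from collections import Counter

# Matches an IPv4 client address at the start of a log line (assumed log layout).
IP_AT_START = re.compile(r"^(\d{1,3}(?:\.\d{1,3}){3})\s")


def requests_per_ip(log_lines):
    """Count requests per client IP address, skipping lines that don't match."""
    counts = Counter()
    for line in log_lines:
        match = IP_AT_START.match(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def heavy_hitters(log_lines, min_requests=100):
    """IPs with at least `min_requests` requests, busiest first."""
    ranked = requests_per_ip(log_lines).most_common()
    return [(ip, n) for ip, n in ranked if n >= min_requests]
```

An address that shows up hundreds of times in a short log is not automatically a bot (it could be an office network or a monitoring tool you set up yourself), so check what the requests look like before you filter it.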

Before you proceed, I must warn you.

Because of the GDPR and privacy concerns, GA4 offers limited options to enter IP addresses in your account. This option is meant to filter internal traffic, but you could also enter IP addresses of spammers and keep their data out of your reports.

4. Customize GA4

Depending on the symptoms of bot traffic you detect in your GA4 reports, you can do different things to filter out bot traffic from reports. They all boil down to customizing GA4. It’s impossible to give a step-by-step guide for every possible situation.

Therefore, I only point you in directions that will hopefully help you set up reports with cleaner data.

- Audience segmentation: you can, for instance, set up audiences of users who come from suspicious regions.

- Comparisons work as filters. They help you remove data you don’t want to see in your reports.

- Explorations allow you to combine different metrics in a dynamic report. You can use bounce rate or any of the above listed metrics that help you identify non-human traffic.

- You can customize existing reports and keep them for you, or share them with your team. This way, everyone is sure about the quality of data.

The data quality review cycle

Many Google Analytics users forget that it is not a set-and-forget tool. If you want to make data-driven decisions, you need to make sure your data is clean and stays that way.

Filtering out bot traffic is not a setting, but part of a process: the data quality review cycle, which consists of 5 stages:

- You analyze the traffic in your reports.

- Then you identify anomalies in your traffic.

- You determine the cause of those anomalies.

- Next, you implement a fix for these problems.

- Then, you document the flaws, using annotations or other records.

Rinse and repeat. The cycle never ends. But that’s the same for the internet, Google Analytics and bots.

You'll have to check your reports to see if new suspicious traffic leaks in.

The waiting game is part of the life of an analyst. You're often waiting for the data to come in so that you can review results.

Analyze your traffic again – a day, a week, a month later, to make sure your solutions are working.

A good thing is that GA4 can notify you by email about anomalies that happen on your site. If something looks odd, investigate it.
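
If you also want to run this kind of check on your own exports, the idea can be sketched in a few lines of Python. To be clear, this is not how GA4 detects anomalies. It is a simple comparison of each day against the days before it, and the function name, the 7-day window and the threshold of 3 standard deviations are all assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(daily_sessions, window=7, z_threshold=3.0):
    """Return the indexes of days that deviate strongly from the prior `window` days."""
    flagged = []
    for i in range(window, len(daily_sessions)):
        baseline = daily_sessions[i - window:i]
        avg, spread = mean(baseline), stdev(baseline)
        if spread == 0:
            # Flat baseline: any change at all counts as a deviation.
            if daily_sessions[i] != avg:
                flagged.append(i)
            continue
        if abs(daily_sessions[i] - avg) / spread > z_threshold:
            flagged.append(i)
    return flagged
```

For the daily counts `[100, 104, 98, 101, 97, 103, 99, 102, 950, 100]`, the function returns `[8]`, the day with 950 sessions. Keep in mind that a spike ends up in the baseline of the days that follow, so a second spike shortly after the first is harder to catch with this approach.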

Hopefully, you can apply this method to your future data quality analysis.

Closing note

It’s impossible to overestimate the amount of website traffic generated by bots. Even visits from the good ones can skew your Google Analytics reports.

It’s risky to rely on GA to filter this type of traffic because it only blocks visits from known bots. Nobody knows how many unknown bots there are out there. Your website may be the next in the queue.

Therefore, it’s important to monitor suspicious-looking traffic on your website.

If you’re working in a team or analyzing high-traffic sites or web shops for customers, you need to take data quality seriously.

Integrate reviews in your workflow and look for signs of bot traffic. Once you detect them, think of the best ways to generate reliable reports.

The fact that you made it all the way down to here shows you are a human. If you're looking for more advanced Google Analytics content, check out our guide to cross domain tracking using Google Analytics.